AI and Coding

Technical details for how to use the latest AI tools

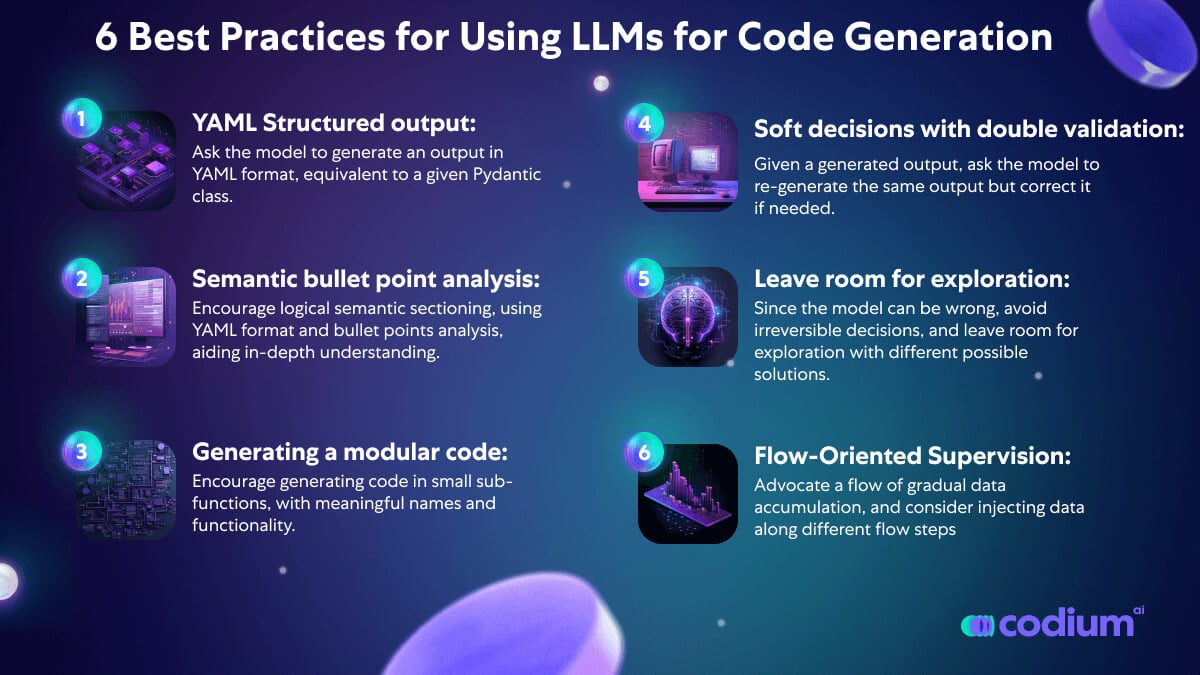

AlphaCodium: a test-based, multi-stage, code-oriented iterative flow that improves the performance of LLMs on code problems. See their blog entry.
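
To make "test-based iterative flow" concrete, here is a minimal sketch of a generate, test, and retry loop. It is not AlphaCodium's actual pipeline: `run_tests`, `iterate`, and `fake_llm` are hypothetical names, and the "model" is a canned list of attempts standing in for a real LLM call.

```python
from typing import Callable, List, Optional, Tuple

# Each test is an (input, expected_output) pair for a function named `solve`.
Tests = List[Tuple[int, int]]


def run_tests(source: str, tests: Tests) -> List[str]:
    """Execute candidate source and return a list of failure messages."""
    namespace: dict = {}
    try:
        exec(source, namespace)  # acceptable in a demo; sandbox untrusted code in practice
    except Exception as exc:
        return [f"code did not load: {exc!r}"]
    solve = namespace.get("solve")
    if solve is None:
        return ["no function named solve was defined"]
    failures = []
    for arg, expected in tests:
        try:
            got = solve(arg)
        except Exception as exc:
            failures.append(f"solve({arg}) raised {exc!r}")
            continue
        if got != expected:
            failures.append(f"solve({arg}) returned {got}, expected {expected}")
    return failures


def iterate(generate: Callable[[str, List[str]], str], problem: str,
            tests: Tests, max_rounds: int = 3) -> Optional[str]:
    """Generate code, test it, and feed the failures into the next attempt."""
    feedback: List[str] = []
    for _ in range(max_rounds):
        candidate = generate(problem, feedback)
        feedback = run_tests(candidate, tests)
        if not feedback:  # every test passed
            return candidate
    return None


# Hypothetical stand-in for an LLM: a buggy first attempt, then a fix.
ATTEMPTS = iter([
    "def solve(n):\n    return n * n + 1\n",
    "def solve(n):\n    return n * n\n",
])


def fake_llm(problem: str, feedback: List[str]) -> str:
    return next(ATTEMPTS)


solution = iterate(fake_llm, "Return the square of n.", [(2, 4), (3, 9)])
print(solution)
```

A real flow would put the failure messages into the next prompt; here `fake_llm` ignores them, which keeps the control flow visible without needing an API key.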

DeepEval is like PyTest only for LLM apps.

Switch from OpenAI to Open Source using PostgresML Switch Kit

```python
import pgml

client = pgml.OpenSourceAI()
results = client.chat_completions_create(
    "HuggingFaceH4/zephyr-7b-beta",
    [
        {
            "role": "system",
            "content": "You are a friendly chatbot who always responds in the style of a pirate",
        },
        {
            "role": "user",
            "content": "How many helicopters can a human eat in one sitting?",
        },
    ],
    temperature=0.85,
)
print(results)
```

StarCoder, from Hugging Face and ServiceNow, is an alternative to GitHub Copilot.

Use LLMs as functions

> When slotting in language models to your code base, a useful mental model is treating them like functions with standard input and output. This blog, from the creator of React Native, shows a few ways you can do that and the benefits of modeling your models this way

```typescript
const prompt = 'Build a chat app UI';

const components = llm<Array<string>>(
  'You only have the following components: ' +
  designSystem.getAllExistingComponents().join(', ') + '\n' +
  'What components do you need to do the following:\n' +
  prompt
);
// ['List', 'Card', 'ProfilePicture', 'TextInput']

const result = llm<{javascript: string, css: string}>(
  'You only have the following components: ' +
  components.join(',') + '\n' +
  'Here are examples of how to use them:\n' +
  components.map(component =>
    designSystem.getExamplesForComponent(component).join('\n')
  ).join('\n') + '\n' +
  'Write code for making the following:\n' +
  prompt
);
// { javascript: '...', css: '...' }
```

Build LLM apps in the browser with Ollama. See Vercel implementation.

Magentic is a Python library that lets you incorporate an LLM easily into regular code.

```python
from magentic import prompt


@prompt('Add more "dude"ness to: {phrase}')
def dudeify(phrase: str) -> str:
    ...  # No function body as this is never executed


dudeify("Hello, how are you?")
# "Hey, dude! What's up? How's it going, my man?"
```

Study a code repo with SolidGPT.

GPT Pilot

> You specify what kind of app you want to build. Then, GPT Pilot asks clarifying questions, creates the product and technical requirements, sets up the environment, and starts coding the app step by step, like in real life, while you oversee the development process. It asks you to review each task it finishes or to help when it gets stuck. This way, GPT Pilot acts as a coder while you are a lead dev who reviews code and helps when needed.

YouAI lets you build custom apps with full-blown workflows, including chat interfaces, summarizers, analyzers, and document editors, and train them on external data.

Wired says YouAI developed Book AI, which Solution Tree and other publishers use to offer an interactive way to talk to a book.

ChatGPT-AutoExpert is a set of detailed prompts to get better code out of ChatGPT.

SudoLang is a programming language designed to collaborate with AI language models including ChatGPT, Bing Chat, Anthropic Claude, and Google Bard. It is designed to be easy to learn and use. It is also very expressive and powerful. A typical code sample includes a short natural language description, a few variable constructs, and a set of flags to change the output.

Stas Bekman’s Machine Learning Engineering Guides and Tools: An open collection of methodologies to help with successful training of large language models and multi-modal models.

Factory “Your Coding Droid”

Unlike existing products, Factory’s droids are hands-off – they can review code, address bugs and answer questions independently.

Poolside (via “The Generalist”)

Takes an OpenAI-style foundation-model approach but focuses on only one capability: code generation. Their technical strategy hinges on the fact that code can be executed, allowing for immediate and automatic feedback during the learning process.

LLM is a command-line utility that lets you talk to OpenAI and other LLMs.

A CLI utility and Python library for interacting with Large Language Models, including OpenAI, PaLM and local models installed on your own machine.

OSSChat is an open-source chatbot.

I tried it with “what libraries exist to help me study my freestyle libre cgm results?” and it returned a bunch of results, but no obvious way to link to the response.

Kaguya is an open-source ChatGPT plugin that can access your filesystem to read and write Python project files while you code.

Building Large Language Models

Getting Started with Transformers and GPT

What Stack Overflow thinks about ChatGPT

Sample Applications

See My Sample Apps for details of one that I built.

ShowHN: BBC “In Our Time”, categorised by Dewey Decimal, heavy lifting by GPT

How to Develop

A16Z published AI Companion App (based on AI Getting Started template)

This is a tutorial stack to create and host AI companions that you can chat with on a browser or text via SMS. It allows you to determine the personality and backstory of your companion, and uses a vector database with similarity search to retrieve and prompt so the conversations have more depth. It also provides some conversational memory by keeping the conversation in a queue and including it in the prompt.
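
The two ideas in that description, similarity search over a backstory and a bounded queue of recent turns, can be sketched without any external services. This is only an illustration, not the A16Z code: `Companion`, `embed`, and `cosine` are hypothetical names, and the bag-of-words "embedding" is a toy stand-in for a real embedding model and vector database.

```python
import math
import re
from collections import Counter, deque
from typing import Deque, List, Tuple


def embed(text: str) -> Counter:
    """Toy embedding: word counts (a real app would call an embedding model)."""
    return Counter(re.findall(r"[a-z0-9']+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[word] * b[word] for word in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class Companion:
    def __init__(self, personality: str, backstory: List[str], memory_size: int = 4):
        self.personality = personality
        # Stand-in for the vector database: (embedding, text) pairs.
        self.store = [(embed(fact), fact) for fact in backstory]
        # Bounded queue: the oldest turns fall off automatically.
        self.memory: Deque[Tuple[str, str]] = deque(maxlen=memory_size)

    def retrieve(self, query: str, k: int = 1) -> List[str]:
        """Return the k backstory facts most similar to the query."""
        q = embed(query)
        ranked = sorted(self.store, key=lambda item: cosine(q, item[0]), reverse=True)
        return [text for _, text in ranked[:k]]

    def remember(self, speaker: str, text: str) -> None:
        self.memory.append((speaker, text))

    def build_prompt(self, user_message: str) -> str:
        """Combine personality, retrieved backstory, and recent turns into one prompt."""
        context = "\n".join(self.retrieve(user_message))
        history = "\n".join(f"{speaker}: {text}" for speaker, text in self.memory)
        return (
            f"{self.personality}\n\n"
            f"Relevant backstory:\n{context}\n\n"
            f"Recent conversation:\n{history}\n\n"
            f"User: {user_message}\nCompanion:"
        )


bot = Companion(
    "You are Alex, a cheerful hiking guide.",
    ["Alex grew up near the mountains of Colorado.", "Alex has a dog named Biscuit."],
)
bot.remember("User", "Hi!")
bot.remember("Companion", "Hello there!")
print(bot.build_prompt("What is your dog called?"))
```

The resulting string is what would be sent to the model; swapping `embed` and `self.store` for a real embedding API and vector database leaves `build_prompt` unchanged.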

How to build a ChatGPT + Google Drive app with LangChain and Python: “How to use ChatGPT with your Google Drive in 30 lines of Python.”

```python
from langchain.chat_models import ChatOpenAI
from langchain.chains import RetrievalQA
from langchain.document_loaders import GoogleDriveLoader
from langchain.text_splitter import RecursiveCharacterTextSplitter
from langchain.embeddings import OpenAIEmbeddings
from langchain.vectorstores import Chroma

folder_id = "YOUR_FOLDER_ID"

# Load the documents in the Drive folder (subfolders are skipped).
loader = GoogleDriveLoader(
    folder_id=folder_id,
    recursive=False
)
docs = loader.load()

# Split the documents into chunks small enough to embed and to fit in a prompt.
text_splitter = RecursiveCharacterTextSplitter(
    chunk_size=4000, chunk_overlap=0, separators=[" ", ",", "\n"]
)
texts = text_splitter.split_documents(docs)

# Embed the chunks and index them in a Chroma vector store.
embeddings = OpenAIEmbeddings()
db = Chroma.from_documents(texts, embeddings)
retriever = db.as_retriever()

llm = ChatOpenAI(temperature=0, model_name="gpt-3.5-turbo")
qa = RetrievalQA.from_chain_type(llm=llm, chain_type="stuff", retriever=retriever)

# Simple question-answering loop; stop it with Ctrl+C.
while True:
    query = input("> ")
    answer = qa.run(query)
    print(answer)
```

Playing with Streamlit and LLMs: details of how to customize an LLM to answer questions about a blog.

A tweet thread from @matchaman11 on how to train a chatbot on a Notion database.

Build Your Own ChatBot with GPT-3 and Lenny Chatbot

Stanford Alpaca, “which aims to build and share an instruction-following LLaMA model” (and HN)

Seattle’s AI2 Incubator (Vu Ha) on Github keeps a sample AI2I_Webapp with docker and AWS deployment information

Build Your Own LLM

Large Language Model Course is divided into three parts:

- 🧩 LLM Fundamentals covers essential knowledge about mathematics, Python, and neural networks.

- 🧑‍🔬 The LLM Scientist focuses on learning how to build the best possible LLMs using the latest techniques.

- 👷 The LLM Engineer focuses on how to create LLM-based solutions and deploy them.

Early Access Manning book: Build a Large Language Model (From Scratch). GitHub: @rasbt

Transformers from Scratch: Matt Diller’s step-by-step guide, with Colab

Building Large Language Models

GPT4All is a GitHub repo of open-source chatbots trained on a massive collection of clean assistant data including code, stories and dialogue

and a simple step-by-step tutorial of how to use it with LangChain

Karpathy’s NanoGPT is

The simplest, fastest repository for training/finetuning medium-sized GPTs. It is a rewrite of minGPT that prioritizes teeth over education. Still under active development, but currently the file train.py reproduces GPT-2 (124M) on OpenWebText, running on a single 8XA100 40GB node in about 4 days of training. The code itself is plain and readable: train.py is a ~300-line boilerplate training loop and model.py a ~300-line GPT model definition, which can optionally load the GPT-2 weights from OpenAI. That’s it.

via HN Discussion and more

Healthtech

Tools

Code Editing

Meta Code Llama is an LLM optimized for coding.

llama.cpp will run using 4-bit integer quantization on a MacBook.

The Manifesto outlines the project to make local LLMs.
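
For a feel of what 4-bit integer quantization does to a block of weights, here is a simplified sketch. It is not llama.cpp's on-disk format: the block size, the symmetric scale, and the names `quantize_block`, `dequantize_block`, and `pack` are assumptions made for illustration.

```python
import math
from typing import List, Tuple

BLOCK_SIZE = 32  # assumed block size for this sketch


def quantize_block(weights: List[float]) -> Tuple[float, List[int]]:
    """Map floats to signed 4-bit integers in [-8, 7] sharing one scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 7 if max_abs else 1.0
    codes = [max(-8, min(7, round(w / scale))) for w in weights]
    return scale, codes


def dequantize_block(scale: float, codes: List[int]) -> List[float]:
    """Recover approximate floats from the codes and the shared scale."""
    return [scale * code for code in codes]


def pack(codes: List[int]) -> bytes:
    """Store two 4-bit codes per byte (offset by 8 so each fits in 0..15)."""
    padded = codes + [0] * (len(codes) % 2)
    return bytes(((a + 8) << 4) | (b + 8) for a, b in zip(padded[::2], padded[1::2]))


weights = [math.sin(i) for i in range(BLOCK_SIZE)]
scale, codes = quantize_block(weights)
restored = dequantize_block(scale, codes)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
packed = pack(codes)

# 32 weights fit in 16 bytes plus one scale, versus 128 bytes as 32-bit floats.
print(len(packed), "bytes; max rounding error", max_error)
```

The rounding error per weight is at most half the scale, which is why storing a separate scale for each small block keeps the loss manageable.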

Google launches Project IDX, an AI-enabled browser-based development environment for building full-stack web and multiplatform apps.

Stability AI and StableCode

- based on BigCode

- long-context-window version has a context window of 16,000 tokens

- Rather than using the ALiBi (Attention with Linear Biases) approach to position outputs in a transformer model — the approach used by StarCoder for its open generative AI model for coding — StableCode is using an approach known as rotary position embedding (RoPE).

see HN for mixed reviews.
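
The difference between the two position schemes is easier to see in code. The sketch below is a plain-Python illustration, not code from StableCode or StarCoder; `rope`, `alibi_bias`, and the sample vectors are made up for the example. RoPE rotates the query and key vectors so that their dot product depends only on the relative offset, while ALiBi leaves the vectors alone and subtracts a distance penalty from the attention score.

```python
import math
from typing import List


def rope(vec: List[float], position: int, base: float = 10000.0) -> List[float]:
    """Rotate each consecutive pair of dimensions by a position-dependent angle.

    Expects an even number of dimensions.
    """
    dim = len(vec)
    out: List[float] = []
    for i in range(0, dim, 2):
        theta = position * base ** (-i / dim)  # lower frequency for later pairs
        x, y = vec[i], vec[i + 1]
        out += [x * math.cos(theta) - y * math.sin(theta),
                x * math.sin(theta) + y * math.cos(theta)]
    return out


def dot(a: List[float], b: List[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def alibi_bias(query_pos: int, key_pos: int, slope: float) -> float:
    """ALiBi adds a linear penalty that grows with query-key distance."""
    return -slope * abs(query_pos - key_pos)


q = [0.3, -1.2, 0.8, 0.5]
k = [1.0, 0.4, -0.7, 0.9]

# RoPE: the score depends only on the offset (2 in both cases), not the absolute position.
score_near = dot(rope(q, 5), rope(k, 3))
score_far = dot(rope(q, 12), rope(k, 10))
print(abs(score_near - score_far) < 1e-9)

# ALiBi: raw score plus a bias that depends on the same offset.
print(dot(q, k) + alibi_bias(5, 3, slope=0.5))
```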

Phind lets you ask coding questions, with a 6000-character limit. It has been upgraded to Phind-70B, which is based on the CodeLlama-70B model and fine-tuned on an additional 50 billion tokens, yielding significant improvements. It also supports a context window of 32K tokens and is available to try for free and without a login.

Cursor.so: The AI-first Code Editor: Build software faster in an editor designed for pair-programming with AI

Vector Databases

Pinecone is a vector search platform.

LanceDB is an open-source database for vector search built with persistent storage, which greatly simplifies retrieval, filtering and management of embeddings.

The key features of LanceDB include:

- Production-scale vector search with no servers to manage.

- Store, query and filter vectors, metadata and multi-modal data (text, images, videos, point clouds, and more).

- Support for vector similarity search, full-text search and SQL.

- Native Python and JavaScript/TypeScript support.

- Zero-copy, automatic versioning: manage versions of your data without needing extra infrastructure.

- Ecosystem integrations with LangChain 🦜️🔗, LlamaIndex 🦙, Apache Arrow, Pandas, Polars, DuckDB and more on the way.

LanceDB’s core is written in Rust 🦀 and is built using Lance, an open-source columnar format designed for performant ML workloads.

Zilliz is a web-hosted version of the open source Milvus

Chroma: the AI-native open-source embedding database is integrated with PaLM embeddings.

Also see Vespa, Qdrant, Supabase, Chroma and this tweet

MiniChain

This chaining tool might be the first actually truly useful one for both prototyping and production. It uses function decorators and YAML templates in a clever and powerful way to enable chaining. Examples show that you can write a chat bot, vector database, and more in just 20 lines.

Langchain

Langchain, developed by Harrison Chase, is a Python and JavaScript library for interfacing with OpenAI’s GPT APIs (later expanding to more models) for AI text generation. More specifically, it’s an implementation of the paper ReAct: Synergizing Reasoning and Acting in Language Models published October 2022, colloquially known as the ReAct paper, which demonstrates a prompting technique to allow the model to “reason” (with a chain-of-thoughts) and “act” (by being able to use a tool from a predefined set of tools, such as being able to search the internet). This combination is shown to drastically improve output text quality and give large language models the ability to correctly solve problems.

See also Langchain in Realworld, a detailed list of use cases and how to build them.
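
The reason-then-act loop described above can be sketched in a few lines. This is not LangChain's implementation: `react`, `calculator`, and `scripted_model` are hypothetical names, the `Action: tool[input]` text format is an assumption, and the scripted function stands in for a real LLM call.

```python
import operator
import re
from typing import Callable, Dict

OPS = {"+": operator.add, "-": operator.sub, "*": operator.mul, "/": operator.truediv}


def calculator(expression: str) -> str:
    """A tiny tool: evaluate '<number> <op> <number>'."""
    match = re.fullmatch(r"\s*(\d+(?:\.\d+)?)\s*([+\-*/])\s*(\d+(?:\.\d+)?)\s*", expression)
    if not match:
        return "error: expected '<number> <op> <number>'"
    left, op, right = match.groups()
    try:
        return str(OPS[op](float(left), float(right)))
    except ZeroDivisionError:
        return "error: division by zero"


def scripted_model(transcript: str) -> str:
    """Hypothetical stand-in for an LLM: acts first, answers once it has an observation."""
    if "Observation:" not in transcript:
        return "Thought: I need to multiply.\nAction: calculator[17 * 23]"
    observation = transcript.rsplit("Observation:", 1)[1].strip()
    return f"Thought: I have the result.\nFinal Answer: {observation}"


def react(question: str, model: Callable[[str], str],
          tools: Dict[str, Callable[[str], str]], max_steps: int = 5) -> str:
    """Alternate model reasoning with tool calls until a final answer appears."""
    transcript = f"Question: {question}"
    for _ in range(max_steps):
        reply = model(transcript)
        transcript += "\n" + reply
        final = re.search(r"Final Answer:\s*(.*)", reply)
        if final:
            return final.group(1).strip()
        action = re.search(r"Action:\s*(\w+)\[(.*)\]", reply)
        if action is None:
            break  # the model neither acted nor answered
        name, argument = action.groups()
        tool = tools.get(name)
        observation = tool(argument) if tool else f"error: unknown tool {name}"
        transcript += f"\nObservation: {observation}"  # fed back on the next turn
    return "no answer"


answer = react("What is 17 * 23?", scripted_model, {"calculator": calculator})
print(answer)
```

The key design point is that each tool result is appended to the transcript as an observation, so the model's next "thought" can build on it.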

Max Woolf’s explanation of why LangChain isn’t necessary: “LangChain’s vaunted prompt engineering is just f-strings, a feature present in every modern Python installation, but with extra steps.”

(and see HN)
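
To illustrate the f-string point, a prompt "template" needs nothing beyond the standard library. The function name and wording below are made up for the example.

```python
def build_prompt(context: str, question: str) -> str:
    """A prompt template is just a function that returns a formatted string."""
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\n"
        "Answer:"
    )


prompt = build_prompt(
    "LanceDB's core is written in Rust.",
    "What language is LanceDB's core written in?",
)
print(prompt)
```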

Issues

Vicki Boykis: What we don’t talk about when we talk about building AI apps, a summary of some engineering issues, such as how to handle large Docker files and long build times.